The Ys of Unit tests - Making sense of a good practice

Part 1: Why testing? The claim, falsifiability and verifiability

We Software Engineers identify a problem that is lurking somewhere out there and needs to be solved, model that problem, find the optimal solution, build a software system around that optimal solution, and then ship it.

And, fingers crossed 🤞, it runs in production just fine, so we go back to bed and sleep like a log.

And they all hugged each other tightly; the little ones knew that they had learned an important lesson today: that with kindness, cooperation, and a little bit of magic, anything was possible.

Well, Thermodynamics begs to differ!

A fairy tale, isn’t it? Exactly! Unfortunately, that is exactly how things do NOT work in Software Engineering, or in our current version of the universe. Requirements change, things fail, crashes happen, and sometimes, thanks to a random beam of cosmic radiation, a CPU starts yielding 1+1=3 on one of the nodes of the Kubernetes cluster where your system is running.

Unit tests won’t solve everything, and they certainly won’t protect your software against random cosmic beams. They are, however, very good at proving claims about your software. When we ship something to production, we are effectively shipping a package of claims: our software product claims that it does x, y, and z, and those claims follow a kind of hierarchical structure (depending on your architecture). For example, if you are using a layered architecture, each layer calls methods in the next layer. Each method in every one of those layers is doing something, or rather claiming that it does something, and this is where the beauty of unit testing shines. If you verify each of those methods (units) in each layer in isolation, you are effectively verifying that they aren’t just claims but facts! (More on that later.) As a result, you find problems early (early feedback). And if anything changes (the claim changes), the unit test fails, because it is still verifying the old claim, and that brings the change, or the effect of that change, to your and your team’s attention.
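To make verification in isolation concrete, here is a minimal sketch in Python (the article does not prescribe a language). It assumes a hypothetical two-layer design: an `OrderService` in the service layer calls `save` on a repository from the layer below. The test swaps the real repository for a hand-written fake, so only the service’s own claim is checked: it computes the order total and saves the order exactly once.

```python
import unittest


class OrderService:
    """Hypothetical service-layer class that depends on a repository in the layer below."""

    def __init__(self, repository):
        self._repository = repository

    def place_order(self, item: str, quantity: int, unit_price_cents: int) -> dict:
        # The claim this unit makes: it computes the order total
        # and hands the complete order to the repository exactly once.
        order = {
            "item": item,
            "quantity": quantity,
            "total_cents": quantity * unit_price_cents,
        }
        self._repository.save(order)
        return order


class FakeOrderRepository:
    """Hypothetical in-memory stand-in for the real data-access layer, used only in tests."""

    def __init__(self):
        self.saved = []

    def save(self, order: dict) -> None:
        self.saved.append(order)


class OrderServiceTest(unittest.TestCase):
    def test_place_order_computes_total_and_saves_once(self):
        repository = FakeOrderRepository()
        service = OrderService(repository)

        order = service.place_order("book", 3, 1250)

        # Verifies the service's claim without touching a real database.
        self.assertEqual(order["total_cents"], 3750)
        self.assertEqual(repository.saved, [order])
```

Saved as, say, `test_orders.py` (a hypothetical file name), it can be run with `python -m unittest test_orders`. If `place_order` later changes what it computes or stops saving, the test fails and surfaces the change, which is the early feedback described above.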

That is an oversimplification, of course, so let’s discuss the whys of unit tests point by point. I hope that by the end of this series of articles a clear understanding, or rather a clear comprehension, will form in the reader’s mind, any confusion will be cleared up, and any counterarguments to unit testing as a general practice will be defeated.

That said, the aim of these articles is not to defeat arguments per se, but rather to make sense of a good practice. It’s always good to question things, so don’t worry: discouraging questions is never the purpose. So let’s start building the reasoning behind the practice of unit testing step by step.

In this series we will try to talk only about the whys, not the whats or the hows; sometimes, though, talking about the whys requires tackling topics from the whats category. We will try to avoid that, but let’s see how it goes.

The Scientific Method: Falsifiability and Verifiability

Why testing, right? Why bother?

Is your method really doing what it says it is doing? How do you know that?

Let me guess!

You know that because you wrote it yourself, right?!! Unfortunately, that’s not really how it works. There has to be a way to make sure that your method is actually doing what it claims to be doing. We need to prove that scientifically, somehow. How do we prove that something is right or wrong, true or false? Doubt! We start by doubting that our code does what it says it does. I know it sounds very philosophical, but bear with me and think about it for a minute! In the end, philosophy (epistemology, to be precise) is the study of the fundamental nature of knowledge, right? I promise I won’t go into too much philosophy, but let’s talk about doubt, which reminds me of a very famous philosopher, René Descartes:

René Descartes, the originator of Cartesian doubt, put all beliefs, ideas, thoughts, and matter in doubt. He showed that his grounds, or reasoning, for any knowledge could just as well be false. Sensory experience, the primary mode of knowledge, is often erroneous and therefore must be doubted.

A careful reader: Great, we started with doubts and now all we have is doubts. So now I have no idea whether my code really works all the time and always does what it says it does. What should I do?

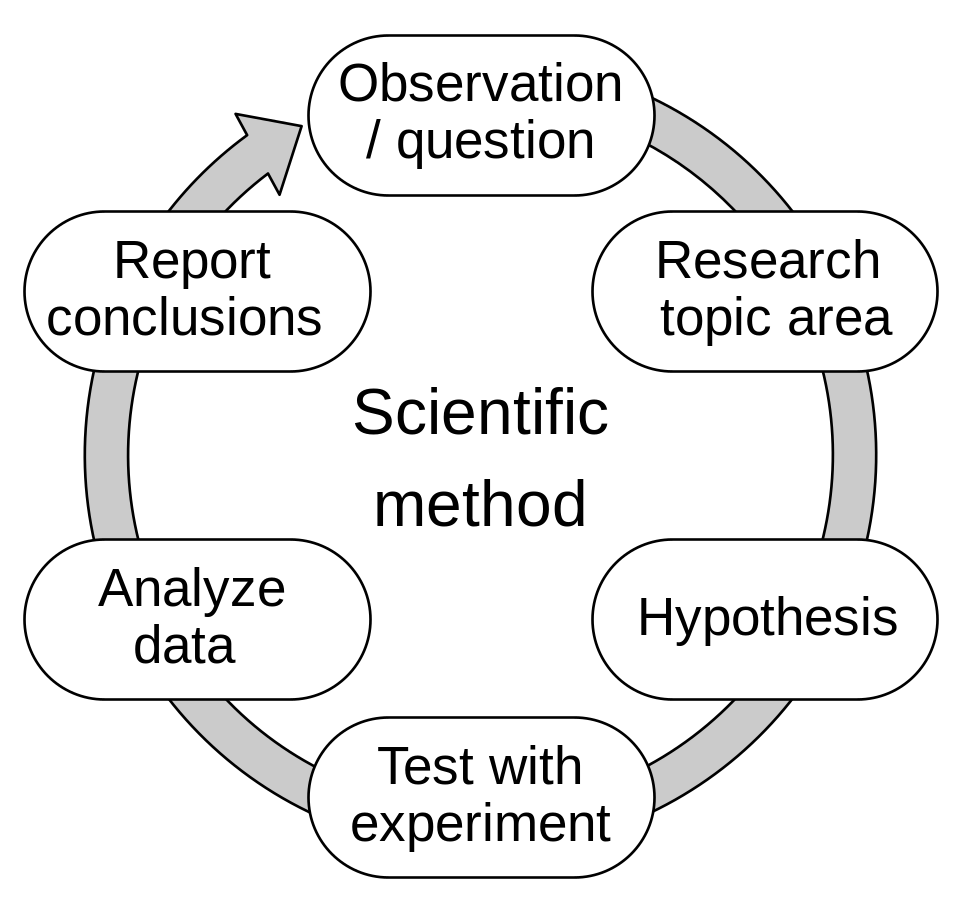

Don’t worry, I am certainly not going to leave you with your doubts. But that was an essential step: it moves our knowledge back to the observation stage, and from there we can follow the scientific method to prove our observation that our code works correctly all the time, or that a unit’s (method’s) claim is correct.

Let’s take as an example the statement “There are yellow cats that look like Pikachu from Pokémon” (Damn, they are cute! 😱)

For my statement above to be provable, it must be both falsifiable and verifiable. What do I mean by that? OK, imagine the following conversation:

A careful reader: Prove your statement that such cute things exist.

Claim holder: Only Software Engineers can see them.

A careful reader: My friend is a Software Engineer; I can ask him.

Claim holder: Only Software Engineers who know C# and C++ and can code in both can see them.

A careful reader: My friend knows someone who can code in both C# and C++; I can ask them.

Claim holder: Only Software Engineers who know C# and C++ and who were born on 31 February 1999 can see them.

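As the conversation shows, every time the claim is put to a test, the claim holder adds a new condition, until nobody could possibly check it (there is no 31 February). There is no way to disprove such a hypothesis or claim, so doubting it leads nowhere, and therefore there is no way to prove it either.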

It is like receiving a PR comment from a colleague asking why a service class isn’t unit tested, and replying “It just WORX, source: me” 🤣

Falsifiability is a deductive standard of evaluation of scientific theories and hypotheses, introduced by the philosopher of science Karl Popper in his book The Logic of Scientific Discovery (1934).

A theory or hypothesis is falsifiable (or refutable) if it can be logically contradicted by an empirical test.

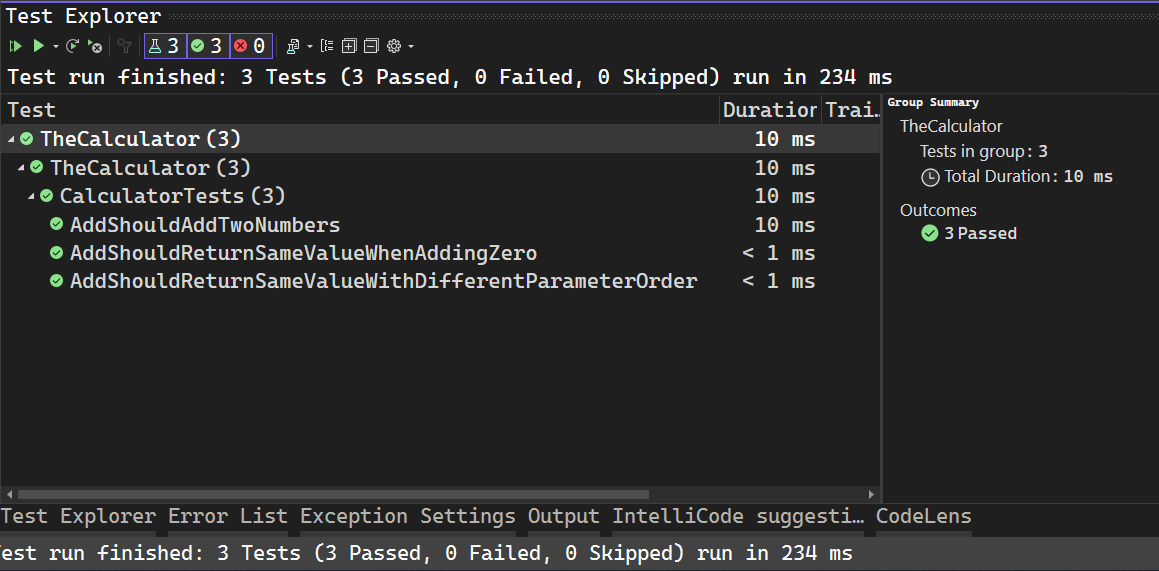

So falsifiability is the possibility of proving that a hypothesis or claim is false. How can we do that in terms of code? A test. In the unit test realm, we write tests that pass when the assertions are met (verifiable) and fail when the assertions aren’t met (falsifiable). But wait! Some code out there isn’t even testable (remember the statement above that cannot be disproven?), so it follows that we as Software Engineers must strive to write code that is at least testable (falsifiable).
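A common way for code to end up hard to falsify is reaching out to something a test cannot control, such as the system clock. The Python sketch below uses hypothetical functions to contrast a greeting that reads the clock itself with one that receives the current time as a parameter; only the second lets a test check both branches deterministically.

```python
import unittest
from datetime import datetime


def greeting_hard_to_test() -> str:
    # Hypothetical example: reads the real clock, so which branch runs
    # depends on when the test happens to execute, not on the test itself.
    return "Good morning" if datetime.now().hour < 12 else "Good afternoon"


def greeting(now: datetime) -> str:
    # Hypothetical example: the current time is passed in, so a test can
    # supply any moment it likes and the claim becomes falsifiable.
    return "Good morning" if now.hour < 12 else "Good afternoon"


class GreetingTest(unittest.TestCase):
    def test_before_noon(self):
        self.assertEqual(greeting(datetime(2024, 1, 1, 9, 0)), "Good morning")

    def test_from_noon_onwards(self):
        self.assertEqual(greeting(datetime(2024, 1, 1, 12, 0)), "Good afternoon")
```

Passing the time in is the simplest form of dependency injection; injecting a clock object would work just as well, as long as the test, not the wall clock, decides what time it is.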

Let’s conclude this point:

Until the code we write is tested, it is merely a claim.

To verify that code does what it says it does, the code must be testable (falsifiable).

We do that by writing unit tests with comprehensive assertions that check that everything the code does is actually done correctly.

The unit tests we write must also be falsifiable; that is, they must fail if the claim changes (in other words, to some extent, if the code changes).

That also applies to every kind of test we write (more on that in later parts).
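The difference between a test that can fail and one that cannot is easy to demonstrate. In this Python sketch, `apply_discount`, its deliberately broken twin, and the small `passes` helper are all hypothetical names chosen for illustration; both tests are run against both implementations.

```python
def apply_discount(price: float, percent: float) -> float:
    """Hypothetical unit. Claim: returns the price reduced by `percent` percent."""
    return price * (1 - percent / 100)


def apply_discount_broken(price: float, percent: float) -> float:
    # Hypothetical, deliberately wrong implementation: the discount is never applied.
    return price


def vacuous_test(impl) -> None:
    # Calls the code but asserts nothing, so it can never fail:
    # it is not falsifiable and therefore verifies nothing.
    impl(100.0, 20.0)


def falsifiable_test(impl) -> None:
    # Pins the claim down with a concrete expectation, so it fails
    # whenever the behavior stops matching the claim.
    assert abs(impl(100.0, 20.0) - 80.0) < 1e-9


def passes(test, impl) -> bool:
    """Return True if `test` succeeds against `impl`, False if an assertion fails."""
    try:
        test(impl)
        return True
    except AssertionError:
        return False


for impl in (apply_discount, apply_discount_broken):
    print(impl.__name__,
          "vacuous:", passes(vacuous_test, impl),
          "falsifiable:", passes(falsifiable_test, impl))
```

Running it shows the vacuous test passing for both implementations, so it tells us nothing, while the falsifiable test passes for the correct implementation and fails for the broken one.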

Failure is not a possibility but an inevitability

Why testing is your only guard for protecting your software

In the world we live in, nothing is perfect (not even nothing itself 🤷‍♂️), and that includes the software systems we build. Failure is an inevitability in software engineering because of the complexity of software systems and the continuous changes in requirements and technology. Given enough time, any software system is guaranteed to fail eventually. That is why everybody strives for 99.999% availability, the “five nines”: you will never see a claim of 100% availability, and if you ever do see a system with 100% availability, congrats! You are officially in the afterlife 🤝.
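To put the five nines into numbers: an availability target translates directly into a yearly downtime budget. This short Python helper (its name is hypothetical) does the arithmetic, assuming a 365-day year.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # assumes a 365-day year: 525,600 minutes


def downtime_budget_minutes(availability_percent: float) -> float:
    """Maximum minutes of downtime per year allowed by an availability target."""
    unavailable_fraction = 1 - availability_percent / 100
    return MINUTES_PER_YEAR * unavailable_fraction


for target in (99.9, 99.99, 99.999, 100.0):
    print(f"{target}% -> {downtime_budget_minutes(target):.2f} minutes of downtime per year")
```

With these numbers, 99.999% leaves a budget of roughly 5.26 minutes per year, and 100% leaves exactly zero, which is why nobody seriously promises it.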

Software systems are inherently complex, consisting of many interdependent components that interact with each other in various ways. As a result, even small changes in one part of the system can have unintended consequences elsewhere. In addition, software systems are often developed and maintained by large teams of developers, each with their own understanding of the system, which makes it challenging to ensure consistent quality and behavior.

Software development is a constantly evolving field, with new technologies, tools, and programming languages emerging all the time. This means that software engineers must constantly adapt and learn new skills to keep up with the latest developments. In addition, the requirements and expectations of software users are constantly changing, which means that software systems must also evolve to meet these changing needs.

There are also a lot of unexpected events that could bring down your software as easily as eating a piece of cake 🎂: from hardware failures to (as mentioned above) random beams of high-energy cosmic particles ⚡ hitting your CPU, to earthquakes, volcanoes, asteroids, and alien invasions (JK 😆). Basically, events you have no control over whatsoever, and they will hit you hardest if you do not have region redundancy.

Given all of these factors combined, it is inevitable that software systems will experience failures or defects at some point in their lifetime. Even with rigorous testing and quality assurance processes, it is impossible to completely eliminate the possibility of failures or defects in a complex software system.

A careful reader: Well, how would unit tests, or testing in general, help me fight an alien invasion or protect my software against the evil, space-travelling, highly energetic muons that cause nasty SEUs¹?

Good question! As I said above, and in the subtitle of this section, failure is inevitable, period. Hence the Thermodynamics² joke. Given enough time, your software will eventually fail in one way or another. But! That doesn’t mean there is nothing you can do about it. Testing is the trick we use to fake-control entropy³ in our software: by writing comprehensive tests (unit tests included), you make predictable behaviour (a.k.a. good states) more likely in your software and, as a result, unpredictable behaviour or events (a.k.a. bad states) less likely.

This is not really related to unit tests directly, but rather to testing in general; it still applies, though: a change in one area of the code can affect other areas as well. Hence, if we cover all these areas with tests in isolation, we receive feedback in the form of a failing test, especially if we build those tests so that they can fail (remember what we discussed about falsifiability and verifiability).
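Here is a minimal Python sketch of that ripple effect, built on two hypothetical functions: a shared helper, `normalize_email`, and `is_registered`, which depends on it. Because the test for `is_registered` pins down the behavior it inherits from the helper, a later change to `normalize_email` that drops the lowercasing would make this test fail, flagging the side effect before it reaches production.

```python
import unittest


def normalize_email(email: str) -> str:
    # Hypothetical shared helper: other parts of the code rely on
    # it trimming whitespace and lowercasing.
    return email.strip().lower()


def is_registered(email: str, registered: set[str]) -> bool:
    # Hypothetical caller: a change to normalize_email silently changes this result.
    return normalize_email(email) in registered


class IsRegisteredTest(unittest.TestCase):
    def test_lookup_ignores_case_and_surrounding_whitespace(self):
        # Fails if normalize_email ever stops trimming or lowercasing,
        # even though this test is about is_registered.
        self.assertTrue(is_registered("  Ada@Example.com ", {"ada@example.com"}))

    def test_unknown_email_is_not_registered(self):
        self.assertFalse(is_registered("bob@example.com", {"ada@example.com"}))
```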

Please note, however, that a failure here isn’t necessarily a region-wide outage at your cloud provider. A failure could be as simple as a missing parameter check in a method that a simple unit test would have detected, or a call to a dependency’s method that should never have happened under certain circumstances and that could also have been easily prevented. Anyway, we are going to talk about that in more detail in later parts, so please stay tuned.
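Both of those small failures are easy to pin down. The sketch below uses Python’s standard `unittest.mock.Mock` with a hypothetical `transfer` function that must validate its `amount` argument and must never call the (equally hypothetical) payment gateway when that validation fails.

```python
import unittest
from unittest.mock import Mock


def transfer(gateway, account_id: str, amount: int) -> None:
    """Hypothetical unit under test: sends `amount` (in cents) through a payment gateway."""
    # Parameter verification: a missing check here is exactly the kind of
    # small failure a unit test catches early.
    if amount <= 0:
        raise ValueError("amount must be positive")
    gateway.charge(account_id, amount)


class TransferTest(unittest.TestCase):
    def test_rejects_non_positive_amount(self):
        gateway = Mock()
        with self.assertRaises(ValueError):
            transfer(gateway, "acc-1", -500)
        # The dependency must never be called when validation fails.
        gateway.charge.assert_not_called()

    def test_valid_amount_charges_gateway_once(self):
        gateway = Mock()
        transfer(gateway, "acc-1", 500)
        gateway.charge.assert_called_once_with("acc-1", 500)
```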

Let’s conclude this point as well:

We live in an imperfect universe; nothing is perfect (I know that’s an old, boring cliché, but it serves the purpose of this section).

That also includes the software we write (daaah).

On top of that, the software we write tends to be very complicated, and the more complicated something is, the easier it is to make mistakes and the easier it is for it to fail (ask Thermodynamics if you don’t believe me 😏).

Tests, and especially unit tests, protect our software systems against mistakes, errors, and bugs that a unit test could easily have detected, and the cherry on top is that they can easily be automated and integrated into our CI/CD pipeline (that is, every time anything changes anywhere, the unit tests run).

You can quote me on this one:

Any software system will eventually fail given enough time.

Mahmoud Alsayed

I hope you enjoyed reading Part 1 of this series of articles on unit tests. Please feel free to engage in the comment section; if you are reading this in your email, feel free to visit the website, share your thoughts, and engage with others there.

Until next time.

¹ SEU, or single event upset: a phenomenon in which a high-energy particle, ultimately originating from outer space, literally hits an electronic device and changes its state, for example by flipping a bit in a memory chip. The device itself is not damaged, but the flipped state persists until it is overwritten.

² Thermodynamics: the study of heat, energy, and their interactions in physical systems. There are four fundamental laws of thermodynamics; one of the most interesting, the second law, basically states that the entropy of an isolated system goes up whether you like it or not.

³ Entropy: a measure of the randomness, or disorder, in a system. Thermodynamics states that an isolated system, given enough time and no external influence, will naturally move toward a state of higher entropy (a.k.a. higher randomness or disorder).
